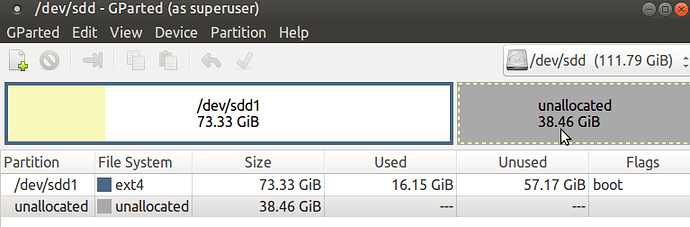

Your observation about the UUIDs being the same is right on the money. Linux expects UUIDs to be UNIQUE, so the duplication can indeed keep the backup from mounting when you are running a MATE 16.04 installation that has the identical UUID, as you were trying to do.

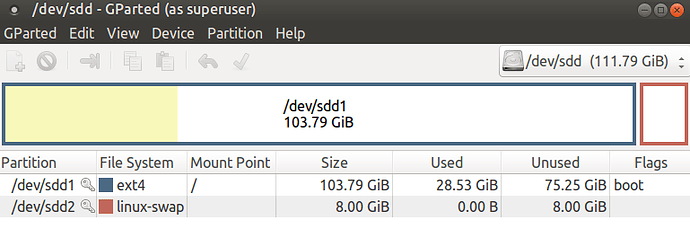

That said, you should still be able to reverse the boot order in your BIOS, in which case the situation should reverse too: after booting from the 110GB drive, you would find that MATE refuses to mount the 73GB MATE partition on the 220GB drive.
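
If you want to confirm that the two partitions really do share a UUID, here is a minimal sketch. It assumes `lsblk` from util-linux is available (it is on a stock Ubuntu install); `dup_uuids` is just a helper name I made up for illustration.

```shell
# Print any value that appears more than once on stdin (blank lines ignored).
# dup_uuids is a hypothetical helper, not a standard command.
dup_uuids() {
    grep -v '^$' | sort | uniq -d
}

# List the UUID of every block device and show only the duplicated ones.
# Skipped quietly if lsblk is not installed.
if command -v lsblk >/dev/null 2>&1; then
    lsblk -rno UUID | dup_uuids
fi
```

Any UUID printed here is present on more than one partition. No output means no duplicates were found.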

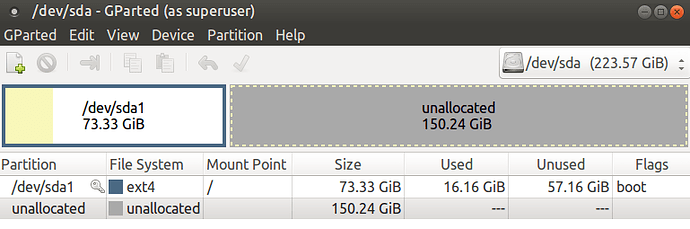

I did not suggest changing the UUIDs on the copied 16.04 drive, since that creates other complications, and the conflict will go away on its own later. Once you have verified that the copied 16.04 on your 110GB drive boots correctly on its own, you can blow away the original 73GB partition on your 220GB drive. When you re-partition that drive and install 18.04 WITH A NEW PARTITION TABLE AND NEW PARTITIONS (which will have NEW UUIDs), the duplicate UUID conflict is eliminated.

Does this sound right to you? (I assumed that you wanted to recover the space on your 220GB drive, then re-partition it and install 18.04 there. Is that right?)

SO THE FIRST ORDER OF BUSINESS IS TO MAKE SURE THAT YOUR COPIED 16.04 MATE INSTALLATION WAS BACKED UP CORRECTLY, AND WILL BOOT PROPERLY FROM THE 110GB DRIVE.
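
One quick sanity check before rebooting is to compare the first sector of the two drives. This is only a sketch: it assumes an MBR-style disk (where the first 512 bytes hold the boot code and partition table) and GNU `cmp`. `first_bytes_match` is a name I made up, and `/dev/sdX` and `/dev/sdY` are placeholders for your actual devices.

```shell
# first_bytes_match A B N: succeed if the first N bytes of A and B are identical.
# Hypothetical helper built on GNU cmp (-s = silent, -n = byte limit).
first_bytes_match() {
    cmp -s -n "$3" "$1" "$2"
}

# Example with placeholder device names (reading raw devices needs root):
#   sudo cmp -n 512 /dev/sdX /dev/sdY && echo "boot sectors match"
```

Only compare the boot sector this way. The filesystem contents will start to differ as soon as either installation has been booted after the copy.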

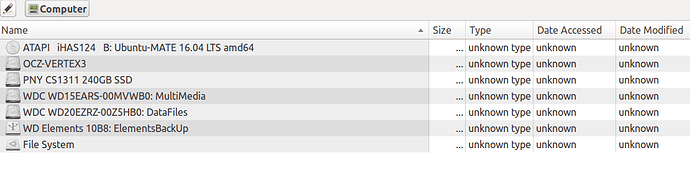

As I noted above, the duplicate UUIDs should not keep you from reversing the boot order in your BIOS and booting from the 110GB drive (which should now have an identical GRUB boot sector, partition table, and first 73GB partition). But just in case your BIOS or MATE is still getting confused in some way, have you tried temporarily disabling your 220GB drive completely in the BIOS, so that Linux thinks it has a single 110GB drive which is sda?

If you can’t get the 73GB MATE partition on the 110GB drive to boot up properly no matter what, even after trying this, then something obviously went wrong with the copy operation, so here are a few simple questions:

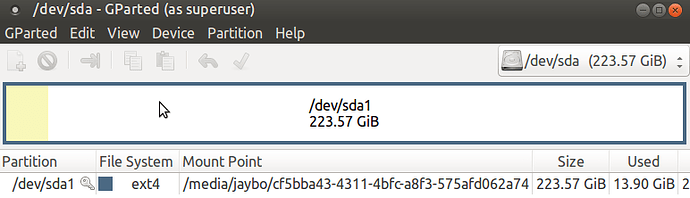

What was the actual dd copy command that you used? Most importantly, did you copy the full base device to base device (i.e. sda, sdb, sdc, sdd, etc.), or did you only copy partitions (i.e. sda1, sdb1, sdc1, etc.)?

To preserve the boot sector in the copy, we need to copy base device to base device (sda, sdb, sdc, sdd), with the upper bound of the dd copy set high enough to guarantee that we get EVERYTHING in your current MATE 16.04. When calculating the minimum upper bound, don’t forget the difference between GB = 1,000,000,000 bytes and GiB = 1024x1024x1024 = 1,073,741,824 bytes.
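
As a rough sketch of that calculation: the helper below treats the 73GB figure as binary gigabytes (the larger of the two readings) and adds a safety margin, then prints a dd command rather than running it. `blocks_needed`, the 2 GiB margin, and the `/dev/sdX` and `/dev/sdY` device names are all my own placeholders, so substitute your real source and target drives.

```shell
# blocks_needed SIZE_GIB MARGIN_GIB: number of 1 MiB blocks needed to cover
# SIZE_GIB plus MARGIN_GIB, both counted in binary gigabytes (1024 MiB each).
# Hypothetical helper for illustration only.
blocks_needed() {
    size_gib=$1
    margin_gib=$2
    echo $(( (size_gib + margin_gib) * 1024 ))
}

# 73 "GB" read as binary gigabytes, plus an assumed 2 GiB margin to cover the
# boot sector and the gap before the first partition.
count=$(blocks_needed 73 2)

# Only PRINT the command. /dev/sdX (source) and /dev/sdY (target) are
# placeholders; double-check them before running anything, since dd will
# overwrite the target without asking.
echo "sudo dd if=/dev/sdX of=/dev/sdY bs=1M count=$count conv=fsync status=progress"
```

Copying a little too much is harmless as long as the target drive is big enough. Copying too little will truncate the filesystem.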

Also, although I mentioned that you should copy using a live session, one thing I assumed, but should probably have stated explicitly, is that whatever devices your 220GB and 110GB drives are mapped to (sda, sdb, etc.) must all be UNMOUNTED in your LIVE SESSION while you are doing the copy. You can check this with the mount command from a terminal, or more easily by using the “disks” GUI application from the preferences/hardware/disks menu.
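
For the terminal route, here is a minimal sketch of that check. It reads `/proc/mounts` (the same information the mount command prints). `is_device_mounted` is a helper name I made up, and `/dev/sdX` and `/dev/sdY` are placeholders for your two drives.

```shell
# is_device_mounted DEVICE [MOUNTS_FILE]
# Succeeds if DEVICE or any of its partitions appears in the first column of
# the mounts table (defaults to /proc/mounts). Hypothetical helper.
is_device_mounted() {
    dev=$1
    mounts=${2:-/proc/mounts}
    awk -v d="$dev" 'index($1, d) == 1 { found = 1 } END { exit !found }' "$mounts"
}

# Placeholder device names; replace with the drives you are about to copy.
for dev in /dev/sdX /dev/sdY; do
    if is_device_mounted "$dev"; then
        echo "$dev has mounted partitions; unmount them before copying"
    else
        echo "$dev is not mounted"
    fi
done
```

Running `lsblk -o NAME,SIZE,MOUNTPOINT` gives a similar overview at a glance.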

This ability to have everything UNMOUNTED is the whole reason we use a LIVE SESSION in the first place.

Sorry, I should have noted this, but I have done this so many times that it’s tricky to remember all the little details that snag you the first time through.

EDIT:

If you get everything sorted out, so your 16.04 is booting correctly from the 110GB drive, you can eliminate the unneeded Ubuntu 12.04 entry in the 16.04 GRUB boot menu by running:

sudo update-grub
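
To see which entries ended up in the regenerated menu, something like the sketch below can help. It assumes the usual Ubuntu location `/boot/grub/grub.cfg` and that titles are single-quoted, as they are in the files update-grub generates. `list_grub_entries` is a made-up helper name.

```shell
# list_grub_entries FILE: print the title of each menuentry/submenu line.
# Hypothetical helper; splits each line on single quotes and prints the title.
list_grub_entries() {
    awk -F"'" '/^[ \t]*(menuentry|submenu) / { print $2 }' "$1"
}

# Assumed default path on Ubuntu; skipped if the file is not readable.
if [ -r /boot/grub/grub.cfg ]; then
    list_grub_entries /boot/grub/grub.cfg
fi
```

If the 12.04 entry is still listed after running update-grub, then GRUB is still detecting a 12.04 installation on one of the attached drives.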

When you install 18.04, it will install its own new copy of GRUB on the 220GB drive boot sector, and as part of that process, it should automatically detect your 16.04 MATE install on the 110GB drive.

This should happen even if you don’t bother to sort out the GRUB bootloader issues on the 110GB 16.04 MATE installation. Getting all the boot issues sorted out for the copied 16.04 MATE installation is still worthwhile, though, since it will give you confidence that the copy operation completed without errors, and will allow the 110GB drive to boot on its own at some future date if your 220GB drive has a failure.